Hello fellas, this is the first time I've actually posted something on the forums lol

As the title says, you might already be aware of what I'm going to talk about and what it probably has to do with Osu!.


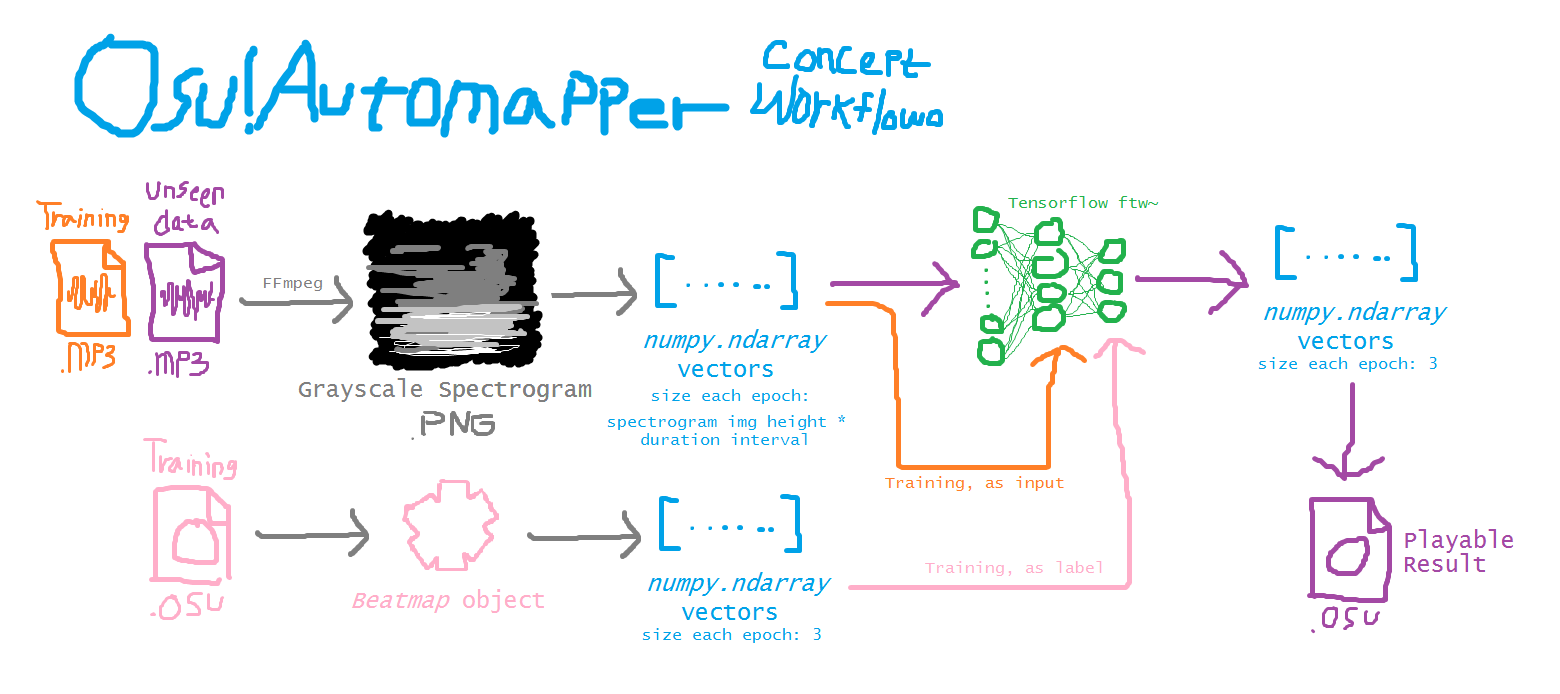


tl;dr:

I'm making an AI that learns how to map and vomits out raw beatmaps when I force-feed it .mp3 songs. I need your help finding proper beatmaps to train the AI on, so it does its job correctly and no longer gets spanked by me.

First of all, I have read this user's guide to this part of the forum, but I don't think my post fits in the Gameplay & Rankings forum, or even the Beatmaps section, because this post is really about "Suggestion for training data" and "Data Science". Still, it's oddly Osu!-related, so it doesn't fit in Off-topic either. Please let me know if there's a more appropriate Osu! forum section.

So, basically, I've made an artificial intelligence that learns how to make an Osu!Standard beatmap from songs. I've also made a program that converts both a beatmap and its song into the data format I need, and converts that data back into a beatmap again. I already have some songs and beatmaps to start 'teaching' the AI how to make new ones, but since this is also the first time I've developed something this complex, my AI is very limited in its ability to produce a good beatmap.

...or in nerd language:

I've made a neural network using TensorFlow in Python (through Anaconda, though) that is designed to map a beatmap for a given song. The data wrapper I've made uses FFmpeg to turn a song into training-sized pieces of a grayscale frequency spectrogram, each covering a fixed duration interval (with a resolution down to one millisecond, i.e. 0.001 s). The beatmap is cut into pieces of the same duration interval, and those pieces serve as the labels, synced to the matching song pieces. The data fed into and spat out of the machine are NumPy vectors (one-dimensional ndarrays, but stacked lol). Since I know TensorFlow accepts plain one-dimensional ndarrays, I feel relieved.
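The slicing step above can be sketched in plain Python. This is a minimal illustration under assumptions, not the wrapper's actual code: it assumes the FFmpeg spectrogram image has already been decoded into a list of rows (one row per frequency bin, one column per millisecond, integer gray values), and `slice_spectrogram` is a hypothetical name. Each window is flattened column by column, so its length is height * interval, matching the input size in the example.

```python
from typing import List

def slice_spectrogram(spec: List[List[int]], interval_ms: int) -> List[List[int]]:
    """Cut a spectrogram (rows = frequency bins, columns = 1 ms time steps) into
    consecutive windows of `interval_ms` columns and flatten each window into a
    vector of length height * interval_ms. A trailing partial window is dropped."""
    if interval_ms <= 0:
        raise ValueError("interval_ms must be positive")
    height = len(spec)
    width = len(spec[0]) if height else 0
    windows = []
    for start in range(0, width - interval_ms + 1, interval_ms):
        vector = []
        # Column by column, so each millisecond's frequency bins stay together.
        for t in range(start, start + interval_ms):
            for f in range(height):
                vector.append(spec[f][t])
        windows.append(vector)
    return windows

spec = [[0, 10, 68, 210],     # frequency bin 0 over 4 ms
        [0, 0, 255, 255]]     # frequency bin 1 over 4 ms
print(slice_spectrogram(spec, 2))  # [[0, 0, 10, 0], [68, 255, 210, 255]]
```

With NumPy, the same window would be `spec[:, start:start + interval_ms].flatten(order="F")`, which is closer to what you would actually hand to TensorFlow.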

Example of one training sample (one duration interval):

Input (song): [0, 0, 0, 10, 68, 210, 256, 256, ... ]

>>> size = height of the spectrogram image * the chosen duration interval

Output/label (beatmap): [1, 0, 0]

>>> size = 3, marking the presence of a circle, slider, or spinner, respectively, during the current duration interval
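On the label side, here is a sketch of how the three-element vector could be built for every interval. Assumptions: the hit objects have already been read from the `.osu` file's `[HitObjects]` section as `(time_ms, type)` pairs, where `type` is the format's bit-flag field (1 = circle, 2 = slider, 8 = spinner; 4 marks a new combo and is ignored here), and each object counts only in the interval where it starts. `make_labels` is a hypothetical name. Because the vector is multi-hot rather than one-hot, an interval holding both a circle and a slider simply becomes `[1, 1, 0]`.

```python
from typing import List, Tuple

# Bit flags of the hit object "type" field in the .osu format.
CIRCLE, SLIDER, SPINNER = 1, 2, 8

def make_labels(objects: List[Tuple[int, int]], song_ms: int,
                interval_ms: int) -> List[List[int]]:
    """Return one [circle, slider, spinner] presence vector per full interval.
    Each object is counted only in the interval where it starts."""
    n = song_ms // interval_ms
    labels = [[0, 0, 0] for _ in range(n)]
    for time_ms, obj_type in objects:
        i = time_ms // interval_ms
        if not 0 <= i < n:
            continue  # outside the song or in the dropped partial interval
        if obj_type & CIRCLE:
            labels[i][0] = 1
        if obj_type & SLIDER:
            labels[i][1] = 1
        if obj_type & SPINNER:
            labels[i][2] = 1
    return labels

# A circle with new combo (1 | 4), a slider and a circle sharing one interval,
# and a spinner with new combo (8 | 4).
objects = [(0, 5), (25, 2), (27, 1), (40, 12)]
print(make_labels(objects, song_ms=50, interval_ms=10))
# [[1, 0, 0], [0, 0, 0], [1, 1, 0], [0, 0, 0], [0, 0, 1]]
```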

Even though I've actually made it this far, this is one of my first experiences wandering in the wilderness of Machine Learning, after finishing the Introduction to Machine Learning course at college last semester. So I don't expect much from the AI, but at least I want to take something I've learned and actually use it in a real-life project.


Here is how it works in a nutshell:

That limitation prevents me from feeding it most of the beatmaps in my Osu! folder.

Those limitations are:

- Timing points: most maps have complex timing point setups that my stupid AI cannot handle. Since I cut maps into pieces of a fixed number of milliseconds, two or more of circle, slider, and spinner can end up in the same interval. The problem is caused by a new uninherited timing point (a red line, which sets an absolute BPM, unlike an inherited green line, which only sets a percentage-based slider velocity) that either has a different BPM from the previous section or sits at a timestamp that breaks the previous 'beat' cycle. Different BPMs bother me because my AI can only produce a map with one uniform BPM.

- Most of them aren't long enough to properly teach my AI. Marathon maps look like a good solution at first glance, but it turns out not all of them are. Half of the marathon songs are compilations: Sotarks' Fhána compilation, for example, is great to play, but because a compilation consists of songs with different BPMs, I heavy-heartedly threw it off the list. This nano.RIPE compilation traumatized my AI even more; MADs and meme remixes are the worst for him. And sometimes, the way to make your AI better is simply to feed it more data.

- Vocals are not instruments, you twat! But, y'know, my AI can't separate vocals from instruments yet. Some vocals can be misinterpreted as instruments, which affects how my AI learns.

- Songs that are too loud, have a ridiculously high BPM, sound chaotic, or contain movie SFX (like boom or weeeeeeee bang bang bang skreeee, unless they're used rhythmically) affect how the AI learns when it should place hit objects. It could end up putting an unnecessary insane stream on Peer Gynt's "Morning".
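The timing-point problem can at least be detected automatically before a map goes into training. A sketch, assuming the raw text of a `.osu` file: in the `[TimingPoints]` section each line is `time,beatLength,meter,sampleSet,sampleIndex,volume,uninherited,effects`, red lines have `uninherited` = 1 and a beat length in milliseconds (so BPM = 60000 / beatLength), and green lines are skipped. The helper names are hypothetical, and the 1 ms tolerance for "breaking the beat cycle" is an arbitrary choice.

```python
from typing import List, Tuple

def red_lines(osu_text: str) -> List[Tuple[float, float]]:
    """Return (offset_ms, beat_length_ms) for every uninherited (red) timing point."""
    points = []
    in_section = False
    for raw in osu_text.splitlines():
        line = raw.strip()
        if line.startswith("[") and line.endswith("]"):
            in_section = line == "[TimingPoints]"
            continue
        if not in_section or not line:
            continue
        fields = line.split(",")
        offset, beat_length = float(fields[0]), float(fields[1])
        # Field 7 is "uninherited" (1 = red line). Older files may omit it;
        # there, a positive beatLength marks a red line.
        uninherited = fields[6] == "1" if len(fields) > 6 else beat_length > 0
        if uninherited:
            points.append((offset, beat_length))
    return points

def has_single_bpm(points: List[Tuple[float, float]]) -> bool:
    """True if every red line has the same BPM (rounded to 0.01)."""
    return len({round(60000.0 / bl, 2) for _, bl in points}) <= 1

def off_grid_red_lines(points: List[Tuple[float, float]], tol_ms: float = 1.0) -> List[float]:
    """Offsets of red lines that do not land on a whole beat of the previous red line."""
    off = []
    for (prev_off, prev_bl), (cur_off, _) in zip(points, points[1:]):
        beats = (cur_off - prev_off) / prev_bl
        if abs(beats - round(beats)) * prev_bl > tol_ms:
            off.append(cur_off)
    return off

sample = """[TimingPoints]
1000,500,4,2,0,60,1,0
3000,-50,4,2,0,60,0,0
5000,500,4,2,0,60,1,0
6250,375,4,2,0,60,1,0

[HitObjects]
256,192,1000,1,0,0:0:0:0:
"""
pts = red_lines(sample)
print(pts)                      # [(1000.0, 500.0), (5000.0, 500.0), (6250.0, 375.0)]
print(has_single_bpm(pts))      # False (120 and 160 BPM)
print(off_grid_red_lines(pts))  # [6250.0]
```

A map for which `has_single_bpm` is true and `off_grid_red_lines` returns an empty list would avoid both causes described in the timing-point item.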

These limitations don't mean it will stay like this forever. I'll keep improving it, solving one limitation at a time and hopefully not creating new ones. In the meantime, given these limitations, I need suggestions for beatmaps that work around them. Here are my preferences, if possible:

- A small ratio of timing points to beatmap duration, or at least a consistent BPM. The fewer timing points, the better.

- Preferably a long duration, over 6 minutes if possible.

- The fewer vocals, the better; instrumental only is best.

- The song is not too loud and has no other auditory harms, and the beatmap doesn't feature too many streams or objects placed close together in time, if possible.
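The timing-point and length preferences (the vocal and loudness ones need the audio itself) can be turned into a rough shortlist filter. A sketch under assumptions: `MapStats`, `shortlist`, and both thresholds are hypothetical, and the numbers are assumed to come from your own `.osu` parser.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class MapStats:
    """Hypothetical per-beatmap summary, filled in by your own .osu parser."""
    name: str
    duration_ms: int    # e.g. last hit object time minus first hit object time
    timing_points: int  # red + green lines
    distinct_bpms: int  # number of different red-line BPMs

def tp_per_minute(m: MapStats) -> float:
    """Timing points per minute of map; infinite for an empty map."""
    if m.duration_ms <= 0:
        return float("inf")
    return m.timing_points / (m.duration_ms / 60000.0)

def shortlist(maps: List[MapStats], min_minutes: float = 6.0,
              max_tp_per_minute: float = 1.0) -> List[str]:
    """Keep maps of at least `min_minutes` that have either few timing points
    per minute or a single BPM, ordered from fewest timing points per minute."""
    keep = [
        m for m in maps
        if m.duration_ms >= min_minutes * 60000
        and (tp_per_minute(m) <= max_tp_per_minute or m.distinct_bpms == 1)
    ]
    keep.sort(key=tp_per_minute)
    return [m.name for m in keep]

maps = [
    MapStats("long, one BPM", 8 * 60000, 40, 1),
    MapStats("long, few timing points", 7 * 60000, 3, 2),
    MapStats("compilation", 10 * 60000, 120, 9),
    MapStats("TV size", 90000, 2, 1),
]
print(shortlist(maps))  # ['long, few timing points', 'long, one BPM']
```

The "or" between the two conditions mirrors the first preference (few timing points, or at least a consistent BPM); sorting by timing points per minute puts the cleanest candidates first.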

And thus this post concludes ^_^

Thank you guys for just taking a peek, or even contributing. I have no deliberate intention of advertising my project, but I had to explain some parts of it so my problems are easier to solve. Also, this project was never intended for public release, because it's just a fun project, not really an endeavor to advance science lol. But if you're interested in seeing the code and stuff, take a visit to the GitHub page. I warned you: it's so messy, since it's my first time making a solo project and using git.

Again, thanks!

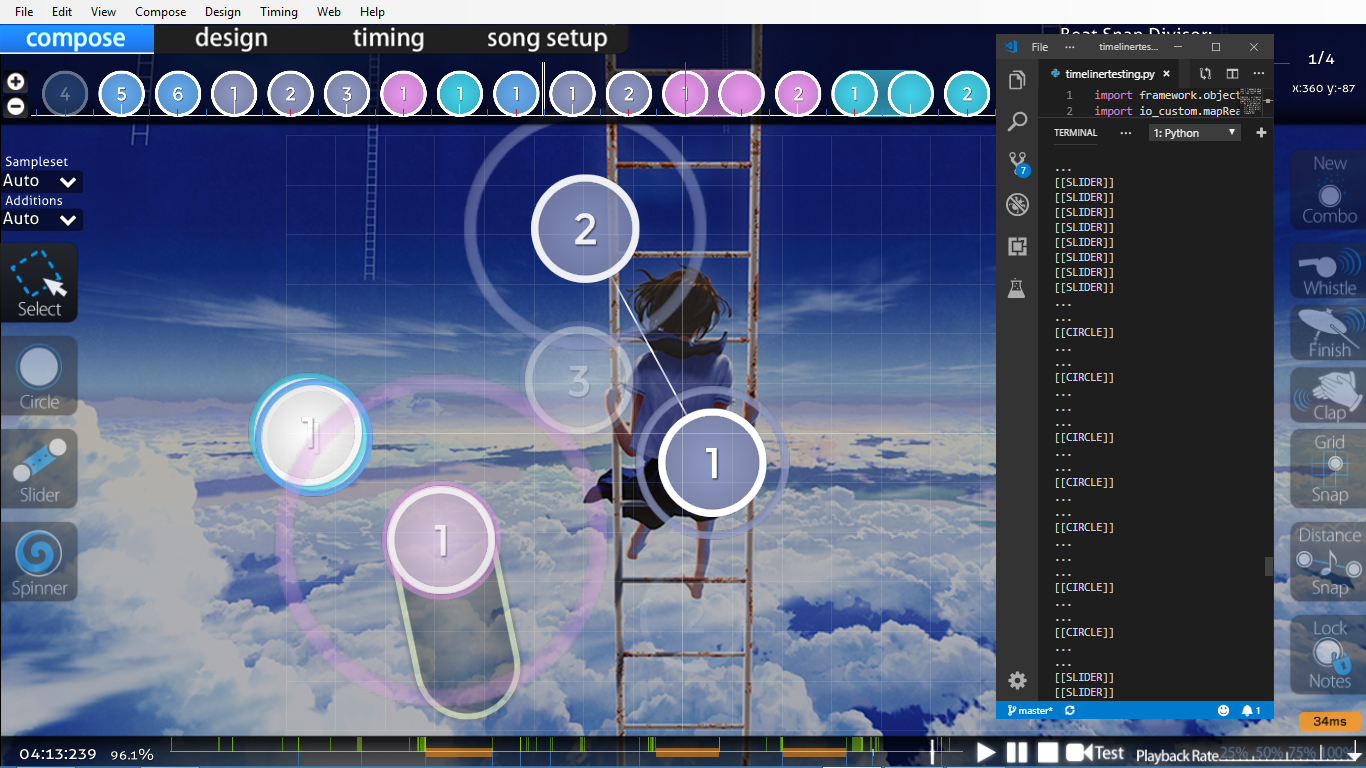

Bonus: a screenshot of the Osu!Automapper debug program, trying to interpret the objects appearing at the current time, synced with the actual map playing
