Want to get into music composition but don't know where to start? Here is a compilation of resources to get you started and to help you improve your work. As you can probably tell, the idea is borrowed from boat's lovely visual arts thread. I'm not sure how much interest there is in this topic on osu!, so for now I have outlined the basics. I will add more if there is enough demand from the community.

Materials:

Q: What software can I use to create music?

The primary software used to make music digitally is called a DAW (Digital Audio Workstation). It is an all-encompassing tool for writing, recording, mixing and mastering. Within a DAW, sound comes from two main sources: recorded audio and synthesis (more on this later). DAWs are largely interchangeable, as virtually all modern ones handle WAV files for recorded audio and plugins for synthesis and effects; most support VST (Virtual Studio Technology), although Logic uses Audio Units and Pro Tools uses its own AAX format instead. The main things that set them apart are the interface, the bundled effects and samples and, of course, the price. Pro Tools has been the studio standard for some time now, but its cost isn't exactly beginner-friendly: $699 online at the time of writing. For someone starting out, I recommend Reaper or FL Studio, as both have fairly unrestricted trial editions. Of course, if you have no qualms with piracy, this isn't an issue. Other popular DAWs include Logic, Reason, Ableton Live and Cubase.

Q: I don't like these modern interfaces. What should I use if I want to write in traditional Western music notation?

Sibelius and Finale are the big names in this area. A decent free alternative I've found is MuseScore. Guitar Pro is an option if you are one of those people who need to use guitar tablature.

Q: What is this MIDI I keep hearing about?

MIDI (Musical Instrument Digital Interface) is a standard that allows otherwise unrelated musical hardware and software to communicate. It has been commonplace since the 80s and is unlikely to go away soon. A common misconception about MIDI is that it's the tinny audio you get from old video games and crappy bootleg karaoke. MIDI contains no sound at all; it simply records performance data such as which note is played (pitch), how hard it is played (velocity) and how long it lasts. If you import a MIDI file into a DAW, you get full access to the notes of every instrument and can assign whatever sounds you like to them. Because of this, MIDI is a very useful way for collaborators using different DAWs to work together, as they can easily send these small files back and forth.
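To make the "no sound, just parameters" point concrete, here is a minimal Python sketch that builds raw MIDI note-on and note-off messages by hand. The helper names are hypothetical; the byte layout (a status byte of 0x90 for note-on or 0x80 for note-off combined with the channel number, followed by a note number and a velocity, each 0 to 127) follows the MIDI 1.0 specification. Note that the whole message is three bytes describing which note, how hard and on which channel: no audio at all.

```python
def _check(channel: int, note: int, velocity: int) -> None:
    """Validate ranges: channels are 0-15 inside the message (shown as 1-16 in most DAWs)."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("note and velocity must be 0-127")


def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Build a 3-byte MIDI note-on message."""
    _check(channel, note, velocity)
    return bytes([0x90 | channel, note, velocity])


def note_off(channel: int, note: int, velocity: int = 64) -> bytes:
    """Build a 3-byte MIDI note-off message; velocity here is the release velocity."""
    _check(channel, note, velocity)
    return bytes([0x80 | channel, note, velocity])


# Middle C (note 60) struck moderately hard on the first channel, then released.
message = note_on(0, 60, 100) + note_off(0, 60)
print(message.hex(" "))  # 90 3c 64 80 3c 40
```

Six bytes describe the whole performance of that note, which is why MIDI files stay so small and why any DAW can put its own sounds on them.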

Getting started:

Q: Okay, so I chose a DAW and I got it all set up and running. Now what?

You painstakingly learn how to control the beast. It's not that bad, I promise. Depending on your preferred method of learning, you can focus on reading the documentation, watching relevant video tutorials or simply playing about with it. Whichever DAW you use, before attempting any real songwriting, I recommend at the very least learning how to insert a virtual instrument, add a WAV sample, program loops and apply effects to a channel.

Update Log:

22/03/13 - Added mixing 101, further reading and additional software sections.

23/03/13 - Added GNU Solfege to the additional software section, added compressors and delay to the mixing 101 section.

24/11/13 - Added beatmatching for dummies

Basic music theory

Music can essentially be divided into four concepts: rhythm, melody, harmony and timbre. I will go into detail on each of these.

Rhythm:

Patterns in time, essentially. The two things that define rhythm in music are time signatures and note values. A time signature dictates how many beats there are in a bar and, to an extent, the feel of the music. Most songs stick to a single time signature, though changes are not uncommon in some genres. The most commonly used time signature is 4/4, also appropriately named common time. It contains four crotchet beats per bar.

Leaving the concept of a crotchet until a bit later, what is the significance of the bar? A bar contains the notes that fit within one cycle of the time signature. For example, in 4/4, a bar could contain four crotchet beats or the equivalent in other note values. I'm sure you've heard this pattern a thousand times in dance and pop music, with four monotonous bass drum hits per bar throughout the song. The importance of the bar becomes clear when you notice where new patterns begin in a 4/4 song. Let's use a song that everyone in the osu! community will know: Bad Apple!!. Please follow along with the analysis and count out each crotchet beat: ONE two three four. Do note that the video provided runs at a different speed from the song, so you won't be able to use it to follow along.

Bad Apple!! is undoubtedly in 4/4. It begins with 8 bars of a drum loop before transitioning into an 8-bar riff. After that, the verse begins. It plays for 8 bars before looping with added backing vocals and guitar, extending the verse to a total of 16 bars. The next section has a new melody but still lasts 8 bars; I'm going to call it a chorus because it's the most repeated element. It loops in the same fashion as the verse, but adds heavy cymbals instead. After that, the chorus leads into an interlude in which the melody persists but the other instruments drop out. The interlude runs for 8 bars before looping with added drums for another 8 bars. After that, the song starts again from the riff; some elements are changed up, but the structure remains largely the same, so I won't go into it. To help clarify, here is the structure of Bad Apple!!:

Intro: 8 bars

Riff: 8 bars

Verse A: 8 bars

Verse B: 8 bars

Chorus A: 8 bars

Chorus B: 8 bars

Interlude A: 8 bars

Interlude B: 8 bars

As you can see, this song progresses very linearly, with every section lasting 8 bars. Bad Apple!! uses several techniques to signal the start of a new section: the intro ends with a reversed cymbal as well as a standard one, sharply halting the song for a moment, and the riff ends with a drum fill (defined by Scott Schroedl as "A short break in the groove--a lick that 'fills in the gaps' of the music and/or signals the end of a phrase. It's kind of like a mini-solo."). These techniques matter in rhythm and song structure because they announce the next section. Without them, the changes in the song would feel more abrupt and less fluid.
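Because every section is a whole number of bars, you can work out where each one starts with simple arithmetic: in 4/4, one bar lasts 4 × 60 / BPM seconds. The Python sketch below does this for the structure above. The function names are hypothetical, it assumes the BPM counts crotchet beats (the usual DAW convention), and the 138 BPM in the usage line is an assumed tempo: plug in whatever tempo you measure for the version you're counting along to.

```python
# Section lengths in bars, taken from the Bad Apple!! structure listed above.
STRUCTURE = [
    ("Intro", 8), ("Riff", 8), ("Verse A", 8), ("Verse B", 8),
    ("Chorus A", 8), ("Chorus B", 8), ("Interlude A", 8), ("Interlude B", 8),
]


def bar_seconds(bpm: float, beats_per_bar: int = 4) -> float:
    """Length of one bar in seconds, assuming the BPM counts crotchet beats."""
    return beats_per_bar * 60.0 / bpm


def section_timeline(structure, bpm: float, beats_per_bar: int = 4):
    """Return (name, start_seconds, end_seconds) for each section, in order."""
    timeline = []
    start = 0.0
    for name, bars in structure:
        end = start + bars * bar_seconds(bpm, beats_per_bar)
        timeline.append((name, start, end))
        start = end
    return timeline


if __name__ == "__main__":
    # 138 BPM is an assumption; substitute the tempo you actually measure.
    for name, start, end in section_timeline(STRUCTURE, bpm=138):
        print(f"{name:<12} {start:6.1f}s to {end:6.1f}s")
```

Counting in whole bars like this is also a handy way to check whether a section you're writing feels "short" simply because it breaks the 8-bar pattern.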

Harmony:

When two or more notes play at the same time. Harmony can be described in terms of two feelings: consonance and dissonance. Consonance makes notes sound related and blended, while dissonance makes them clash. The distance between two notes is called an interval, and the interval is what largely determines how consonant a sound is. From smallest to largest, here are the intervals within an octave:

Perfect unison (0 semitones apart)

Minor second (1 semitone apart)

Major second (2 semitones apart)

Minor third (3 semitones apart)

Major third (4 semitones apart)

Perfect fourth (5 semitones apart)

Tritone (6 semitones apart)

Perfect fifth (7 semitones apart)

Minor sixth (8 semitones apart)

Major sixth (9 semitones apart)

Minor seventh (10 semitones apart)

Major seventh (11 semitones apart)

Perfect octave (12 semitones apart)

This list technically goes on forever, as the pattern repeats every octave: after the octave come the minor ninth, major ninth, minor tenth, major tenth and so forth. What is important to realize, however, is how consonant each of these intervals is. Traditionally, the perfect intervals (unison, fifth and octave, plus the fourth depending on context) are the most consonant; thirds and sixths, whether major or minor, are milder consonances; and seconds, sevenths and the tritone are dissonant. There are degrees within each group, too: for example, a minor second is more dissonant than a major second.

By the way, a semitone (also known as a half step) is the smallest difference between two notes that typically occurs in Western music. If you play every note in sequence, you form what is called a chromatic scale. For reference, these notes are C, C#, D, D#, E, F, F#, G, G#, A, A# and B. Note that I did not list B# or E#; these notes rarely appear, and when they do, it is a matter of musical spelling (how notes are named within a given key), a concept somewhat irrelevant to a beginner. What is vital for any musician to know is that the distance between E and F is not the same as the distance between C and D. A piano keyboard illustrates this well: E and F don't have a black key between them, so they are 1 semitone apart, while C and D do have a black key between them, so they are 2 semitones apart. As you can see from the image, sharp notes also have equivalents as flats (C# is the same key as Db, for example). This follows from the definitions: to sharpen a note is to raise it by a semitone, and to flatten a note is to lower it by a semitone.
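Counting semitones like this is easy to mechanize. The Python sketch below (all names are hypothetical, for illustration only) treats the twelve notes of the chromatic scale as positions 0 to 11, maps the flat spellings of the black keys onto their sharp equivalents, counts semitones upward with wrap-around at the octave, and names the result using the interval list from the harmony section. Because the pattern repeats every octave, anything wider than an octave is reported as a simple interval plus some number of octaves. It deliberately ignores rare spellings such as E# and Cb.

```python
CHROMATIC = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
# Flat spellings of the black keys refer to the same keys as their sharp equivalents.
FLATS = {"Db": "C#", "Eb": "D#", "Gb": "F#", "Ab": "G#", "Bb": "A#"}

# Interval names indexed by semitone distance within one octave.
INTERVALS = [
    "Perfect unison", "Minor second", "Major second", "Minor third",
    "Major third", "Perfect fourth", "Tritone", "Perfect fifth",
    "Minor sixth", "Major sixth", "Minor seventh", "Major seventh",
]


def pitch_class(note: str) -> int:
    """Position of a note within the chromatic scale (C = 0 ... B = 11)."""
    return CHROMATIC.index(FLATS.get(note, note))


def semitones_up(lower: str, upper: str) -> int:
    """Semitones from `lower` up to the nearest `upper` at or above it (0-11)."""
    return (pitch_class(upper) - pitch_class(lower)) % 12


def interval_name(semitones: int) -> str:
    """Name an interval, reducing compound intervals to 'simple interval + octaves'."""
    if semitones < 0:
        raise ValueError("semitones must be non-negative")
    octaves, rest = divmod(semitones, 12)
    if rest == 0 and octaves > 0:
        octaves -= 1
        name = "Perfect octave"
    else:
        name = INTERVALS[rest]
    return name if octaves == 0 else f"{name} + {octaves} octave(s)"


print(semitones_up("E", "F"), semitones_up("C", "D"))  # 1 2
print(interval_name(semitones_up("C", "G")))           # Perfect fifth
print(interval_name(13))                               # Minor second + 1 octave(s), i.e. a minor ninth
```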

If you are the more adventurous type, you may now be thinking "I want to know what music sounds like if these missing black keys existed". This is a well-explored concept, both within and outside Western music, known as microtonal music, in which notes can be less than a semitone apart. I won't cover it here as it's too niche.

Timbre:

This is an instrument's quality: its characteristic sound. The most basic way of examining timbre is to look at fundamental frequencies and overtones. To start with, each note corresponds to a particular frequency. This chart shows it well. You may notice that the same note an octave up (for example, A5 compared to A4) has exactly double the frequency; this is not a coincidence and is key to understanding how we perceive notes. When a note is played on a piano, A4 for example, the pitch you hear is set by its fundamental frequency of 440Hz (a trained ear can identify it as A4). What is not so apparent is that many, many other frequencies are produced on top of the fundamental. These are known as overtones and harmonics. Above all, their pattern and relative strength is what gives an instrument its flavour.
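The octave-doubling rule extends to every note. Assuming twelve-tone equal temperament (where each semitone multiplies the frequency by the twelfth root of 2, so twelve semitones exactly double it) and A4 tuned to 440Hz, a note n semitones above A4 has a frequency of 440 × 2^(n/12). The Python sketch below uses MIDI note numbers as a convenient numbering (A4 is note 69 and middle C, C4, is note 60); the function name is hypothetical.

```python
def midi_to_hz(note: int, a4_hz: float = 440.0) -> float:
    """Frequency of a MIDI note number in 12-tone equal temperament (A4 = note 69)."""
    # Each semitone multiplies the frequency by 2 ** (1/12), so 12 semitones double it.
    return a4_hz * 2 ** ((note - 69) / 12)


for name, note in [("A3", 57), ("A4", 69), ("A5", 81), ("C4", 60)]:
    print(f"{name}: {midi_to_hz(note):.2f} Hz")
# Prints 220.00, 440.00, 880.00 and 261.63 Hz respectively.
```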

I have used hertz to describe notes, but I haven't actually defined it. Hertz (Hz) is the number of cycles something completes per second. The faster a waveform oscillates (or "loops"), the higher it sounds in pitch. To demonstrate this, I have generated two waveforms, one at 220Hz and the other at 110Hz:

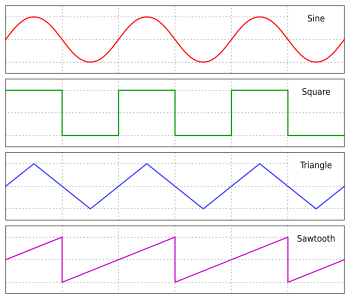

As you can see, the 220Hz waveform oscillates twice as quickly as the 110Hz one. The shape of the waveform is also worth noting; this particular shape is known as a sine wave. A sine wave sounds very thin because a pure one has no harmonics or overtones at all. Other common shapes include triangle, square and saw, as pictured below.

These sorts of unaltered shapes are iconic of older video games, as the hardware of the time didn't allow for much processing, producing a rather harsh, unrealistic sound. Modern synthesizers, both hardware and software, often still build sounds from these basic shapes, though they alter them heavily with a variety of tools.
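To see (and hear) how frequency and shape relate, the Python sketch below generates the four basic shapes with a naive oscillator: it tracks how far through the current cycle it is (the phase) and maps that position to a sine, triangle, square or saw value. This is a simplification assumed for illustration: raw shapes generated this way alias at high pitches, which is one reason real synthesizers produce them more carefully. Function names are hypothetical; only the Python standard library is used.

```python
import math
import struct
import wave


def oscillator(shape: str, freq: float, sample_rate: int = 44100, seconds: float = 1.0) -> list:
    """Naive (non-band-limited) oscillator returning samples between -1 and 1."""
    samples = []
    for i in range(int(sample_rate * seconds)):
        phase = (freq * i / sample_rate) % 1.0  # how far through the current cycle (0 to 1)
        if shape == "sine":
            value = math.sin(2 * math.pi * phase)
        elif shape == "square":
            value = 1.0 if phase < 0.5 else -1.0
        elif shape == "saw":
            value = 2.0 * phase - 1.0  # ramps up, then drops back at the end of each cycle
        elif shape == "triangle":
            value = 1.0 - 4.0 * abs(phase - 0.5)  # -1 at the cycle start, +1 halfway through
        else:
            raise ValueError(f"unknown shape: {shape}")
        samples.append(value)
    return samples


def rising_edges(samples) -> int:
    """Count upward crossings through zero, i.e. roughly the number of cycles."""
    return sum(1 for a, b in zip(samples, samples[1:]) if a < 0 <= b)


def write_wav(path: str, samples, sample_rate: int = 44100) -> None:
    """Save samples (between -1 and 1) as mono 16-bit PCM so you can listen to them."""
    with wave.open(path, "wb") as out:
        out.setnchannels(1)
        out.setsampwidth(2)
        out.setframerate(sample_rate)
        out.writeframes(b"".join(struct.pack("<h", int(s * 32767)) for s in samples))


if __name__ == "__main__":
    # The same shape at twice the frequency completes twice as many cycles per second.
    print(rising_edges(oscillator("sine", 220)), rising_edges(oscillator("sine", 110)))
```

Running it prints cycle counts of roughly 220 and 110. To compare the shapes by ear, call something like `write_wav("saw_220hz.wav", [0.5 * s for s in oscillator("saw", 220)])`; scaling by 0.5 keeps the volume comfortable, and the saw and square should sound noticeably buzzier than the sine.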

Melody:

Coming soon!(?)

Mixing 101

Firstly, let's clarify exactly what mixing is. Mixing is not mastering; mastering is the step taken after the mix is complete. Mastering is the final step before release, and it involves subtle changes to the finished songs to adjust loudness, frequency balance and whatever else the project needs. While mixing is typically done with multiple tracks, mastering traditionally applies changes to the track as a whole without adjusting individual parts. Mastering is never performed before mixing. Mixing follows the recording (or programming and writing out) of a song and involves altering each recorded part, generally with a wider range of tools than is feasible in mastering. That being said, you can mix as parts are recorded, though the idea is always to consider the whole song. Now then, on to the more common tools available.

Equalizers:

I'm sure you've used one of these in a car or on an mp3 player. Equalizers alter the frequency content of a sound. While they're of limited use for everyday listening at home, they are a vital mixing tool. Uses include making room for more instruments, giving sounds a different character, cutting out noise and achieving a more balanced sound. Most equalizers have the same standard set of filter types, so I'll go through them using Fruity Parametric EQ 2 as a visual aid, since it displays frequency horizontally in a view that's pretty easy to read.

High pass: Cuts everything below the frequency you set, letting the higher frequencies "pass". It's standard practice to use this on as many tracks as you can get away with when you want to give your bassline extra prominence.

Low pass: The opposite of a high pass, this cuts off everything to the higher end of where you set it.

Low shelf: Similar to a high pass, but instead of cutting everything below the set frequency, it reduces (or boosts) that region by an amount you choose.

High shelf: The opposite of a low shelf, and the gentler counterpart of a low pass. Not much to say about it.

Peaking: Perhaps the most important of the EQ filters, this boosts or reduces a chosen frequency range. The range I've boosted in the screenshot is a particularly special one: human ears are especially sensitive around 5kHz. If an instrument needs a little more presence in a song, it's not a bad idea to try boosting that range slightly. Be warned that it can sound very piercing when overdone.

Band pass: A bit of an oddball; this cuts all frequencies except the selected band.

Band stop: The final filter type in this particular plug-in. Band stop cuts out a frequency range entirely, unlike peaking, which is limited to -18dB here. I've not found much use for it personally, other than cutting out annoying harmonics and very thin noise. And speaking of thin, I should mention that this equalizer, like many others, lets you adjust the width of a filter to suit your needs. For example, I can make the band stop extremely wide.

Though I'm almost certain I will never want this particular equalization, the width controls are very useful for precision. So, in conclusion, a simple word of advice: try cutting frequencies before you decide to boost any. This is very common advice, for the good reason that it helps things sound more natural. The 5kHz boost is useful, for example, but in some circumstances you may get better results by making a part fit without it.
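If you're curious what an EQ band does under the hood, here is a Python sketch of two of the filter types above as second-order ("biquad") filters, using the coefficient formulas from Robert Bristow-Johnson's widely used Audio EQ Cookbook. This is not necessarily how Fruity Parametric EQ 2 is implemented, just one standard way to build these filters, and the class and function names are hypothetical. Besides filtering samples, it computes the frequency response, so you can check that a peaking band adds exactly its gain at the center frequency and that a high pass is about 3dB down at its cutoff.

```python
import cmath
import math
from dataclasses import dataclass


@dataclass
class Biquad:
    """Second-order IIR filter with coefficients normalized so that a0 == 1."""
    b0: float
    b1: float
    b2: float
    a1: float
    a2: float

    def gain_db(self, freq: float, sample_rate: float) -> float:
        """Magnitude response in dB at `freq` Hz."""
        z1 = cmath.exp(-2j * math.pi * freq / sample_rate)  # z^-1 on the unit circle
        num = self.b0 + self.b1 * z1 + self.b2 * z1 * z1
        den = 1 + self.a1 * z1 + self.a2 * z1 * z1
        return 20 * math.log10(abs(num / den))

    def process(self, samples):
        """Filter a list of samples (Direct Form I)."""
        x1 = x2 = y1 = y2 = 0.0
        out = []
        for x in samples:
            y = self.b0 * x + self.b1 * x1 + self.b2 * x2 - self.a1 * y1 - self.a2 * y2
            x1, x2 = x, x1
            y1, y2 = y, y1
            out.append(y)
        return out


def _biquad(b0, b1, b2, a0, a1, a2) -> Biquad:
    return Biquad(b0 / a0, b1 / a0, b2 / a0, a1 / a0, a2 / a0)


def high_pass(cutoff, sample_rate, q=1 / math.sqrt(2)):
    """High pass: attenuates below `cutoff`, roughly 12dB per octave well below it."""
    w0 = 2 * math.pi * cutoff / sample_rate
    cos_w0, alpha = math.cos(w0), math.sin(w0) / (2 * q)
    return _biquad((1 + cos_w0) / 2, -(1 + cos_w0), (1 + cos_w0) / 2,
                   1 + alpha, -2 * cos_w0, 1 - alpha)


def peaking(center, gain_db, sample_rate, q=1.0):
    """Peaking EQ: boosts (gain_db > 0) or cuts (gain_db < 0) a band around `center`."""
    a = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * center / sample_rate
    cos_w0, alpha = math.cos(w0), math.sin(w0) / (2 * q)
    return _biquad(1 + alpha * a, -2 * cos_w0, 1 - alpha * a,
                   1 + alpha / a, -2 * cos_w0, 1 - alpha / a)


if __name__ == "__main__":
    sr = 44100
    presence = peaking(5000, 3.0, sr)  # a gentle boost around 5kHz
    low_cut = high_pass(100, sr)       # clear out low end below roughly 100Hz
    for f in (50, 100, 1000, 5000):
        print(f, round(presence.gain_db(f, sr), 2), round(low_cut.gain_db(f, sr), 2))
```

The `q` parameter plays the role of the width control described above: a higher Q gives a narrower band.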

Compressors:

Not to be confused with data compression (such as the kinds used in mp3 and jpg files), compression in mixing refers to dynamic range compression. Dynamic range is the difference between the quietest and loudest parts of a sound. Dynamic range compressors reduce it by pushing down the loud parts. This is mainly used to make excessively dynamic performances steadier, or to give a part more "punch" as a byproduct of the compression process. Hearing compression applied to percussion should best demonstrate how it adds punch to hits. As with most kinds of audio processing, extreme compressor settings are often abused and can make for weaker, crushed, unnatural sounds. This video demonstrates that well. The loudness war (the trend of releasing music ever louder at the expense of dynamics) is typically fought in the mastering process, not mixing. The crushed sound comes from limiting, where a compressor is used at a very high ratio (more on ratios later) on the final mix. Limiting is not inherently evil, and I encourage you to learn how to use it when mastering, but always be aware of the dangers. Now, to discuss the common settings of compressors.

Threshold: A setting ranging from roughly -60dB to 0dB. It determines the level above which the compressor starts acting. If, for example, your instrument part only reaches -20dB and the threshold is set to -10dB, the compressor will do nothing regardless of the other settings.

Ratio: Determines how much compression is applied above the threshold. Wikipedia's example explains this best: "a ratio of 4:1 means that if input level is 4 dB over the threshold, the output signal level will be 1 dB over the threshold. The gain (level) has been reduced by 3 dB." Ratios range from 1:1 (no compression) to around 30:1 or above. When the ratio is infinity:1, or high enough to be effectively the same, it is known as limiting; limiting is used in mastering to achieve loudness and coherence. It is also useful while mixing: limiting the master channel with a threshold of 0dB will stop any very loud sounds set up by mistake from damaging speakers and ears, or simply shocking you.

Attack and release: These determine how quickly compression takes effect once a sound passes the threshold, and how quickly it stops taking effect once the sound falls back below it. Both are set in milliseconds and work by fading the compression effect in and out. A slower attack helps preserve transients, the loud, short burst at the beginning of a sound. This diagram helps explain the significance of attack and release.

Gain: Since compression reduces both volume and dynamic range, compressors typically have a gain control (often called makeup gain) to compensate for the loss in volume. As usual, it's measured in dB.

Knee type: Controls how abruptly compression kicks in. A hard knee is the default type and applies the full ratio as soon as the sound crosses the threshold. A softer knee applies compression gradually as the sound approaches and passes the threshold. For a more practical example, Joe Gilder has a good explanation.

Compressors are perhaps the most misunderstood and confusing tools for new producers. I suggest you get familiar with them early on, as they are important whatever music you're making. If everything else goes over your head, do your best to understand threshold and ratio, as they are the most fundamental aspects of compression.
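Here is roughly how threshold, ratio, knee, attack, release and makeup gain fit together, as a Python sketch. It is a simplified feed-forward design assumed for illustration: the function names are hypothetical, the level is measured per sample (real compressors use smoother level detection such as RMS), and commercial compressors differ in their details. The gain computer turns an input level into an output level using the threshold, ratio and optional soft knee; the resulting gain reduction is then eased in at the attack speed and out at the release speed. With a -20dB threshold and a 4:1 ratio, an input at -16dB comes out at -19dB, matching the Wikipedia example quoted above.

```python
import math


def gain_computer(level_db, threshold_db, ratio, knee_db=0.0):
    """Static compressor curve: output level in dB for a given input level in dB."""
    over = level_db - threshold_db
    if knee_db > 0 and abs(over) <= knee_db / 2:
        # Soft knee: blend gradually from 1:1 to the full ratio across the knee width.
        return level_db + (1 / ratio - 1) * (over + knee_db / 2) ** 2 / (2 * knee_db)
    if over <= 0:
        return level_db                   # below threshold: untouched
    return threshold_db + over / ratio    # above threshold: the excess is divided by the ratio


def compress(samples, sample_rate, threshold_db, ratio, attack_ms=10.0,
             release_ms=100.0, makeup_db=0.0, knee_db=0.0):
    """Feed-forward compressor with one-pole attack/release smoothing of the gain reduction."""
    attack = math.exp(-1.0 / (attack_ms / 1000.0 * sample_rate))
    release = math.exp(-1.0 / (release_ms / 1000.0 * sample_rate))
    reduction_db = 0.0  # current (smoothed) gain reduction, always <= 0
    out = []
    for s in samples:
        level_db = 20 * math.log10(max(abs(s), 1e-9))  # avoid log(0) on silence
        target = gain_computer(level_db, threshold_db, ratio, knee_db) - level_db
        # Move towards the target at the attack speed when compressing harder,
        # and at the release speed when letting go.
        coeff = attack if target < reduction_db else release
        reduction_db = coeff * reduction_db + (1 - coeff) * target
        out.append(s * 10 ** ((reduction_db + makeup_db) / 20))
    return out
```

Passing `ratio=math.inf` turns the gain computer into a limiter: anything above the threshold comes out at exactly the threshold. Note also that the first few milliseconds of a loud sound pass through almost untouched while the attack catches up, which is how a slower attack preserves transients.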

Delay:

As the name suggests, this process takes a sound and plays it back again after a delay, usually multiple times in sequence. Delay plugins can get pretty complicated, so I'll stick to the simplest functions.

Time: This setting determines when each echo plays. Most digital delays can set the time relative to the BPM of the project. These are often displayed in an unusual x:yz format: for example, 4:00 plays an echo one crotchet beat after the initial sound, while 2:00 plays the echoes twice as quickly. It's best to just hear these.

Feedback mode: Decides how the echoes behave in the stereo field. There are three basic types: normal, inverted and ping pong. The normal setting makes the echoes keep the stereo position of the original sound. In the example, the piano is panned 100% right, so on the normal setting, the echoes will all be 100% right as well. The inverted setting puts the echoes on the opposite side to the original sound: here, the original sound plays 100% right, but the echoes all play 100% left. Finally, ping pong alternates between the inverted and normal positions with each echo. In this case, the first echo will be 100% left, the second 100% right and so on.

The feedback section also usually has parameters controlling the number of echoes and how quickly they fade out. These vary between delays, but they should be fairly simple to figure out. As usual, check the documentation if you're lost.

Wetness: More terminology to learn. Thankfully, wetness is rather simple: it is how much of the processed sound is applied. At 0% wetness, the delay effect is silent. At 100%, the echoes begin as loud as the initial sound. Wetness is a parameter you can control in virtually any plugin; any DAW worth its salt will let you adjust it somehow anyway.
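The time, feedback mode, feedback and wetness settings can be modelled as a schedule of echoes. The Python sketch below is an assumed, simplified model rather than any particular plugin's behaviour, and its names are hypothetical. It converts a note length to milliseconds from the BPM (one crotchet lasts 60,000 / BPM ms, so 500ms at 120 BPM), applies the normal, inverted or ping pong panning described above, and makes each echo quieter by a fixed feedback factor, with the first echo at the wet level.

```python
def note_to_ms(bpm: float, crotchets: float) -> float:
    """Delay time in milliseconds for a note value measured in crotchet beats."""
    return 60000.0 / bpm * crotchets  # one crotchet lasts 60000 / BPM ms


def echo_schedule(bpm, crotchets, source_pan, mode="normal",
                  feedback=0.5, wet=1.0, count=4):
    """List (time_ms, pan, level) for each echo. Pan runs from -1 (left) to 1 (right)."""
    delay_ms = note_to_ms(bpm, crotchets)
    echoes = []
    for n in range(1, count + 1):
        if mode == "normal":
            pan = source_pan                            # keep the original position
        elif mode == "inverted":
            pan = -source_pan                           # mirror it to the other side
        elif mode == "ping_pong":
            pan = -source_pan if n % 2 else source_pan  # odd echoes mirrored, even ones not
        else:
            raise ValueError(f"unknown mode: {mode}")
        level = wet * feedback ** (n - 1)               # each repeat is quieter by the feedback factor
        echoes.append((n * delay_ms, pan, level))
    return echoes


if __name__ == "__main__":
    # A piano panned hard right, crotchet delay at 120 BPM, ping pong mode.
    for echo in echo_schedule(120, 1, 1.0, mode="ping_pong", count=3):
        print(echo)
```

With these settings the echoes land at 500, 1000 and 1500ms, alternating left, right, left, and each is half as loud as the one before.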

Now that we know how to control delay, what exactly is it used for? I'm sure you've heard it countless times in songs, from subtle uses to obnoxious ones. Delay is used to add texture and dynamics and to suggest rhythm. With the ping pong and inverted settings, it can also help widen the stereo field without adding more instruments. Delay is also related to other effects such as reverb.

Equalizers:

I'm sure you've used one of these in a car or on an mp3 player. Equalizers are used to alter frequency content of a sound. While their application is somewhat limited in a home environment, they are a vital tool while mixing in order to accomplish various things. These include making room for more instruments, giving sounds a different character, cutting out noise and achieving a more balanced sound. Most equalizers have the same standard set of filter types, so I'll be going into them using Fruity Parametric EQ 2 as a visual aid. It presents the frequency data in a horizontal view that's pretty easy to visualize.

High pass: Cuts off everything to the lower end of where you set it. It's standard practice to use this on as many tracks as you can get away with when you want to give your bassline extra prominence.

Low pass: The opposite of a high pass, this cuts off everything to the higher end of where you set it.

Low shelf: Similar to a high pass, but instead of cutting out everything, you can set a reduction of volume instead.

High shelf: Again, the opposite of a low shelf and equivalent to a low pass. Not much to say about it.

Peaking: Perhaps the most important of the EQ filters, this is used to boost or reduce a chosen frequency range. The range that I've boosted in the screenshot is a particularly special one; human ears are rather sensitive around the 5KHz range. If an instrument needs a little more presence in a song, it's not a bad idea to try boosting that range a little bit. Be warned that it can sound very piercing when overdone.

Band pass: Bit of an oddball here; this cuts all frequencies but the selected area.

Band stop: The final filter type in this particular plug-in. Band stop is used to cut out a frequency range entirely, unlike peaking, which has a -18 dB limit. Personally, I've not found much use for it beyond cutting out annoying harmonics and very thin noise. And speaking of thin, I should mention that another feature of this particular equalizer and many others is that you can adjust the width of a filter to suit your needs. For example, I can make the band stop extremely wide.

Though I'm almost certain I will never want to do this particular equalization, the width controls are very useful for precision. So, in conclusion, a simple word of advice: try to cut frequencies before you decide to boost any. This is very common advice for good reason: it helps things sound more natural. While the 5 kHz boost is useful, for example, in certain circumstances you may get better results by working to make a part fit without it.
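To see how band stop differs from peaking, here's a sketch of a notch (band stop) filter, again assuming the "Audio EQ Cookbook" biquad formulas rather than whatever FL uses internally; the function names are hypothetical. At the centre frequency its gain is zero, a complete cut rather than a -18 dB dip, and `q` acts as the width control: a lower `q` makes the notch wider.

```python
import cmath
import math


def biquad_notch(f0, fs, q):
    """Cookbook notch: removes f0 completely; lower q = wider notch."""
    w0 = 2 * math.pi * f0 / fs
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / (2 * q)
    b = [1.0, -2 * cos_w0, 1.0]
    a = [1 + alpha, -2 * cos_w0, 1 - alpha]
    return [x / a[0] for x in b], [x / a[0] for x in a]


def magnitude(b, a, f, fs):
    """Linear gain at frequency f (1.0 = unchanged, 0.0 = silenced)."""
    z_inv = cmath.exp(-2j * math.pi * f / fs)
    num = b[0] + b[1] * z_inv + b[2] * z_inv ** 2
    den = a[0] + a[1] * z_inv + a[2] * z_inv ** 2
    return abs(num / den)


narrow_b, narrow_a = biquad_notch(5000, 48000, q=10)
wide_b, wide_a = biquad_notch(5000, 48000, q=0.5)

print(magnitude(narrow_b, narrow_a, 5000, 48000))          # ~0: fully cut
print(round(magnitude(narrow_b, narrow_a, 4000, 48000), 2))  # neighbours mostly kept
print(round(magnitude(wide_b, wide_a, 4000, 48000), 2))      # wide notch eats them too
```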

Compressors:

Not to be confused with data compression (such as the types used in mp3 and jpg files), compression in audio production more commonly refers to dynamic range compression. Dynamic range is the difference between the quietest and loudest parts of a sound, and dynamic range compressors reduce it by pushing down the loud parts. This is primarily used to make excessively dynamic performances steadier or to give a part more "punch" as a byproduct of the compression process. Hearing compression applied to percussion is the best way to understand how it gives punch to hits. As with most kinds of audio processing, extreme compressor settings are often abused and can make for weaker, crushed, unnatural sounds. This video demonstrates that well. The loudness war is typically waged in the mastering process, not mixing: the crushed sound comes from limiting, a process where a compressor is used at a very high ratio (more on ratios later) on the final mix. Limiting is not inherently evil, and although I encourage you to learn how to use it while mastering, always be aware of the dangers. Now, on to the common settings of compressors.

Threshold: A setting going from about -60 dB to 0 dB. It determines the volume at which the compressor begins to act. If, for example, your instrument part only reaches -20 dB and you have the threshold set to -10 dB, the compressor will do nothing regardless of the other settings.

Ratio: Determines how much compression will be applied. Wikipedia's example explains this best: "a ratio of 4:1 means that if input level is 4 dB over the threshold, the output signal level will be 1 dB over the threshold. The gain (level) has been reduced by 3 dB." Ratio settings typically range from 1:1 (no compression) to around 30:1 or higher. When the ratio is infinity:1, or high enough to be effectively the same, it is known as limiting; limiting is used in the mastering process to achieve loudness and coherence. It is also useful while mixing to limit the master channel with a threshold of 0 dB, as this will prevent any very loud noises set up by mistake from damaging speakers and ears or simply shocking you.
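Threshold and ratio together describe a simple input-to-output rule, sketched below. This is the idealised "static" curve of a hard-knee compressor only (no attack or release), and the function name is hypothetical. It reproduces Wikipedia's 4:1 example, the -20 dB part under a -10 dB threshold from the threshold section, and shows that an infinite ratio pins everything to the threshold, which is what limiting does.

```python
def compressed_level(input_db, threshold_db, ratio):
    """Steady-state output level (dB) of an idealised hard-knee compressor."""
    if input_db <= threshold_db:
        return input_db                       # below threshold: untouched
    # Only the part above the threshold is divided by the ratio.
    return threshold_db + (input_db - threshold_db) / ratio


out = compressed_level(-6.0, -10.0, 4.0)      # 4 dB over a -10 dB threshold at 4:1
print(out)                                    # -9.0, i.e. 1 dB over the threshold
print(-6.0 - out)                             # 3.0 dB of gain reduction
print(compressed_level(-20.0, -10.0, 4.0))    # -20.0: never reaches the threshold
print(compressed_level(-3.0, -10.0, float("inf")))  # -10.0: limiting
```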

Attack and release: These determine how quickly the compression takes effect once a sound passes the threshold and how quickly it stops taking effect afterwards. Both are set in milliseconds and work by fading the compression effect in and out. A slower attack helps preserve transients, the short, loud burst at the very start of a sound such as a drum hit. This diagram helps explain the significance of attack and release.
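Here's one way the "fading in and out" can work, as a rough sketch. It assumes a common design where the requested gain reduction is smoothed by a one-pole filter, using the attack time while reduction is increasing and the release time while it is decreasing; real compressors define their times in different ways, and the function name is hypothetical. The demo uses a sample rate of 1000 Hz purely so that one sample equals one millisecond.

```python
import math


def smooth_gain_reduction(target_gr_db, fs, attack_ms, release_ms):
    """Ease a per-sample gain-reduction curve (dB, >= 0) in and out.

    Assumption: attack/release are one-pole time constants, i.e. roughly
    the time to cover ~63% of the way to the new target.
    """
    att = math.exp(-1.0 / (attack_ms * 0.001 * fs))
    rel = math.exp(-1.0 / (release_ms * 0.001 * fs))
    current = 0.0
    smoothed = []
    for target in target_gr_db:
        coef = att if target > current else rel   # clamping down vs letting go
        current = coef * current + (1 - coef) * target
        smoothed.append(current)
    return smoothed


# A hit that asks for 6 dB of reduction for 200 ms, then silence for 300 ms.
request = [6.0] * 200 + [0.0] * 300
fast = smooth_gain_reduction(request, 1000, attack_ms=10, release_ms=100)
slow = smooth_gain_reduction(request, 1000, attack_ms=50, release_ms=100)
print(round(fast[9], 2), round(slow[9], 2))  # slow attack lets more of the transient through
```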

Gain: Since using compression reduces both volume and dynamic range, gain is a typical tool on compressors to compensate for the loss in volume. As usual, it's measured in dB.

Knee type: Controls how abruptly the compression kicks in around the threshold. A hard knee is the default type: the full ratio is applied as soon as the signal crosses the threshold. A softer knee eases the compression in gradually as the signal approaches the threshold. For a more practical example, Joe Gilder has a good explanation.
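A soft knee can be written as a small change to the threshold/ratio rule. The sketch below uses one common formulation from audio DSP literature (a quadratic blend across a knee width centred on the threshold); that choice, the `knee_db` parameter and the function name are assumptions for illustration, and individual compressors may shape their knees differently. With `knee_db=0` it behaves like a plain hard knee.

```python
def gain_computer(x_db, threshold_db, ratio, knee_db):
    """Static compressor curve with a knee of width knee_db (0 = hard knee)."""
    over = x_db - threshold_db
    if 2 * over < -knee_db:
        return x_db                                   # well below the knee
    if knee_db > 0 and 2 * abs(over) <= knee_db:
        # Inside the knee: compression is blended in gradually.
        return x_db + (1 / ratio - 1) * (over + knee_db / 2) ** 2 / (2 * knee_db)
    return threshold_db + over / ratio                # full ratio above the knee


# Threshold -10 dB, 4:1, with a 10 dB soft knee vs a hard knee
for level in (-16, -10, -5):
    print(level, gain_computer(level, -10, 4, 10), gain_computer(level, -10, 4, 0))
```

Right at the threshold the soft knee is already pulling the level down slightly (about 0.94 dB here), while the hard knee does nothing until the threshold is crossed; by the top of the knee both curves meet.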

Compressors are perhaps the most misunderstood and confusing tools for new producers. I suggest you become familiar with them early on as they are pretty important regardless of what music you're making. If everything else is going over your head, do your best to understand threshold and ratio as they are the most fundamental aspects of compression.

Delay:

As the name suggests, this process involves taking a sound and then playing it back again after a delay, usually multiple times in sequence. Delay plugins can get pretty complicated, so I'll keep this to the most simple of functions.

Time: This setting determines when each echo plays. Most digital delays have a time setting based on the BPM of the project. These are displayed in an unusual x:yz format. For example, 4:00 plays an echo one crotchet beat after the initial sound, while 2:00 plays the echoes twice as quickly. It's best to just hear these.
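If you ever need the delay time in milliseconds (for a delay without tempo sync, say), the arithmetic is simple. This sketch assumes the number before the colon counts sixteenth-note steps, four per crotchet, which matches the 4:00 example; it doesn't try to model the digits after the colon, and the function name is hypothetical.

```python
def delay_ms(bpm, steps, steps_per_beat=4):
    """Echo time in milliseconds for a tempo-synced delay.

    Assumes `steps` are sixteenth notes (4 per crotchet), as in 4:00 = one beat.
    """
    ms_per_beat = 60_000 / bpm        # one crotchet in milliseconds
    return ms_per_beat * steps / steps_per_beat


print(delay_ms(120, 4))   # 500.0: 4:00 at 120 BPM, one crotchet
print(delay_ms(120, 2))   # 250.0: 2:00, echoes twice as quickly
```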

Feedback mode: Decides how the echoes behave in the stereo field. There are three basic types: normal, inverted and ping pong. The normal setting makes the echoes keep the stereo position of the original sound. In the example, the piano is panned 100% right, so on the normal setting, the echoes will all be 100% right as well. The inverted setting, however, places the echoes on the opposite side from the original sound: here, the original plays 100% right, but the echoes all play 100% left. Finally, ping pong alternates between inverted and normal for each echo. In this case, the first echo will be 100% left, the second 100% right and so on.
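The three modes boil down to a simple rule for where each echo is panned, sketched below with -1.0 as hard left and +1.0 as hard right. The mode names follow the description above; the function itself is hypothetical and only computes pan positions, not audio.

```python
def echo_pans(source_pan, n_echoes, mode):
    """Pan position of each echo; -1.0 = 100% left, +1.0 = 100% right."""
    pans = []
    for i in range(1, n_echoes + 1):
        if mode == "normal":
            pans.append(source_pan)           # same side as the original
        elif mode == "inverted":
            pans.append(-source_pan)          # always the opposite side
        elif mode == "ping pong":
            # odd-numbered echoes are inverted, even-numbered ones normal
            pans.append(-source_pan if i % 2 == 1 else source_pan)
        else:
            raise ValueError(f"unknown mode: {mode}")
    return pans


# The piano example: original panned 100% right
print(echo_pans(1.0, 4, "ping pong"))   # [-1.0, 1.0, -1.0, 1.0]
```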

The feedback section also usually has some parameters to control the number of echoes and how quickly they fade out. These vary from delay to delay, but they should be rather simple to figure out. As usual, check the documentation if you're lost.

Wetness: More terminology to learn. Thankfully, wetness is rather simple: it is how much of the processed sound is applied. At 0% wetness, the delay effect will be silent. At 100%, the echoes will begin as loud as the initial sound. Wetness is a parameter you can control in virtually any plugin; any DAW worth its salt will let you adjust it in its interface somehow anyway.
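Feedback and wetness together can be sketched as a basic feedback delay line. This is a generic textbook design, not any particular plugin's algorithm, and the function name and parameters are hypothetical: `feedback` scales each repeat relative to the previous one (so it controls how quickly the echoes fade and, in practice, how many you hear), and `wet` scales the echoes against the untouched dry sound, following the 0% silent / 100% full-volume description above.

```python
def feedback_delay(dry, delay_samples, feedback, wet):
    """Add echoes every `delay_samples`, each `feedback` times the last one.

    wet = 0.0 -> echoes silent; wet = 1.0 -> first echo as loud as the input.
    """
    n = len(dry)
    echoes = [0.0] * n                        # output of the delay line
    for i in range(delay_samples, n):
        # What entered the line earlier: the input plus the fed-back echo.
        echoes[i] = dry[i - delay_samples] + feedback * echoes[i - delay_samples]
    return [d + wet * e for d, e in zip(dry, echoes)]


impulse = [1.0] + [0.0] * 9                   # a single click
print(feedback_delay(impulse, 3, 0.5, 1.0))
# [1.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.5, 0.0, 0.0, 0.25]
```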

Now that we know how to control delay, exactly what is it used for? I'm sure you've heard it countless times in songs from the more subtle usage to the obnoxious. Delay is used to create texture with dynamics and also to suggest rhythm. With the ping pong and inverted settings, it can be used to help widen the stereo field without necessarily adding more instruments. Delay is also related to other effects such as reverb.

Additional software

FamiTracker: If you're unfamiliar with trackers, FamiTracker is a good one to learn with. Trackers are an alternative way of writing music, looking somewhat like a spreadsheet; watching one in action should make it clear. This particular program's unique feature is that it can export NSF (NES Sound Format) files, which can be played back authentically on an NES.

Audacity: While technically a DAW, this is more of a program for quick editing, spectral analysis, encoding or recording. You likely won't need it if you have a full DAW, but it is faster for many simple processes. Just don't try to mix or compose in it, trust me.

GNU Solfege: A small but powerful little tool for ear training exercises. If you want to improve your musical ear and have the patience for a bit of a routine, this is a good program to use.

Further reading

Mastering With Ozone: A clean and concise introduction to mastering in a modern environment and the principles behind the art. I recommend this even if you have no interest in using the iZotope Ozone suite as the information in the guide is universal.

musictheory.net Recommended by Mogsworth: For more practical theory, this is definitely a good choice for learning. The diagrams and step by step instructions are pretty clear from what I've seen.

SynthMania - Famous Sounds: Fairly large compilation of synthetic sounds that have become a bit cliché, focusing on stuff from the 70s to 90s. This list builds on an article that appeared in the October 1995 issue of Keyboard magazine titled "20 Sounds That Must Die", which should give you an idea of the direction here. The audio examples are the selling point.

How Music Works: Bit of an odd book, falling somewhere between an autobiography, observational studies and contemporary musical history. This is something I would recommend more for someone who is already writing songs and wants to step out of their comfort zone and breathe in the diverse man that is David Byrne. If you're a fan of Talking Heads or David himself, you'll definitely get a little more out of this as it goes into the details of his creative process and recording habits.

The Manual (How to Have a Number One the Easy Way): Written by one-hit wonders The Timelords, famous for their song Doctorin' The Tardis, this book goes into the details of studio practices in the late 80s. It's written as a how-to guide for those hellbent on getting a #1 hit. Good to read for some insight into that period musically.

Wikipedia: Yes, the grand Wikipedia is often a great source of information. Be warned that it can be rather relentless in its use of terminology, so have some patience, or go to another source if the article you're reading is needlessly complex for you. As such, it's not the best resource for beginners. Take the first sentence of the article on the tonic, for example: "In music, the tonic is the first scale degree of a diatonic scale and the tonal center or final resolution tone." If you didn't have a musical background, I'm pretty sure you'd have to click all the blue links to understand what the hell you just read. However, its comprehensive nature and references to history are very helpful, so if the vocabulary doesn't put you off, I'd definitely recommend Wikipedia.

Beatmatching for dummies (FL Studio)

First, pick your song; for the sake of simplicity, I suggest using a song with a distinct beat to practice. Drag and drop it into the playlist (F5) and zoom in as far as you can go. It should look something like this:

Now, we need to remove the silence. Get the pencil tool and click and drag the left side of the track so that only the very peak of the waveform remains. If your song has a long intro that lacks a distinctive beat, feel free to cut it all out now since you can add it back in later after timing. Anyway, ensure that you cut everything up to the peak, including the small waves that often appear before it.

This is wrong.

This is right (actually slightly early, but it can easily be fine-tuned later). When cropping, hold Alt to disable snapping so you can cut precisely instead of rhythmically. After doing that, drag the track all the way to the left like so, and you've just done the equivalent of timing the offset.

As for the BPM, turn on the metronome (alt+M) and, well, listen and adjust it accordingly. If you can't hear the metronome properly, double-click the track and lower the volume in the sample window as necessary. The vast majority of songs use whole-number BPMs, so you probably won't need decimal places. You can use the waveform as a guide to how close the BPM is if you're having trouble doing it by ear. For example:
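If your ear is struggling, a quick sanity check is to measure the time between two clear beat peaks that are far apart and do the arithmetic. This is a supplementary trick rather than part of the FL workflow above; the function name and the peak times in the example are made up for illustration. The further apart the two peaks are, the less a small measuring error matters.

```python
def bpm_from_peaks(first_peak_s, last_peak_s, beats_between):
    """Tempo implied by two beat peaks that are `beats_between` beats apart."""
    return 60.0 * beats_between / (last_peak_s - first_peak_s)


# Hypothetical measurement: peaks at 0.25 s and 32.25 s, 64 beats (16 bars of 4/4) apart
print(bpm_from_peaks(0.25, 32.25, 64))   # 120.0
```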

The BPM is set too slow here.

The BPM is set too fast here. When the BPM is too low, the waves will peak before the bar, but they will peak after the bar if the BPM is too fast. Simple enough. Ballpark the BPM first, then listen to the beginning of the track and determine if it is too fast or too slow and adjust accordingly. Always stop the track and go back to the start when changing BPMs or else you won't have a decent frame of reference. Once you're fairly sure of your timing, seek through the track to ensure that the metronome is in time throughout.
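The reason the peaks drift before or after the bar lines is that a wrong tempo error accumulates with every beat. Here's a small sketch of that arithmetic; the function name is hypothetical. A negative result means the song's peak arrives before the grid line (project BPM too low), a positive one means after it (project BPM too high), which is also why checking later in the track exposes small errors.

```python
def peak_offset_ms(song_bpm, project_bpm, beat_index):
    """How far the song's beat `beat_index` lands from the project's grid line.

    Negative: the peak comes before the grid line (project BPM too low).
    Positive: the peak comes after it (project BPM too high).
    """
    song_beat_s = 60.0 / song_bpm
    grid_beat_s = 60.0 / project_bpm
    return 1000.0 * beat_index * (song_beat_s - grid_beat_s)


# A 119 BPM song checked at beat 32 (bar 9 in 4/4)
print(round(peak_offset_ms(119, 117, 32)))   # -276: project too slow, peak early
print(round(peak_offset_ms(119, 121, 32)))   # positive: project too fast, peak late
```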

And you're done! After that, write down the BPM, mute the track, drop your second song in and repeat the above steps to time it. After you've done that, you're ready to match them. Now, assuming the tracks are different BPMs, you have to pick which one to speed up or slow down to match the other. For my example, my second track is 117 BPM while my first is 119, so I am going to speed up the second to 119. Open the sample window of the track you want to change and right-click the time knob.
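It helps to know how big a change you're asking for. The sketch below (hypothetical function name) computes the speed factor for the 117 to 119 BPM example and, assuming plain resampling where speed and pitch change together, the resulting pitch shift in semitones. About 0.29 semitones is under a third of a semitone, which is why small tempo changes often sound fine even without a stretching method.

```python
import math


def tempo_match(original_bpm, target_bpm):
    """Speed factor and pitch shift (semitones) when resampling to a new tempo."""
    speed = target_bpm / original_bpm        # > 1 means the sample plays faster
    semitones = 12 * math.log2(speed)        # pitch change if speed and pitch are linked
    return speed, semitones


speed, semis = tempo_match(117, 119)
print(round(speed, 4), round(semis, 2))      # 1.0171 0.29
```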

It's this guy here. Select autodetect from the dropdown menu. Then, since we don't actually want FL to detect the tempo automatically, click "Type in (BPM)" (fourth from the bottom, I believe) and enter the sample's original tempo. Click accept and it will stretch to the project BPM (the one at the top of FL Studio). By default, this will also change the pitch of the sample. If you don't want this to happen, click Resample and change it to a stretching method such as pro default.

One last thing: after stretching and timing the songs, move them forward on the playlist by a bar and drag back in some of the audio before the first beat, so the track doesn't come in abruptly and end up weird and artifacted. You can remove any actual silence later.

Once your songs are matched like this, you can use the alt dragging method you did before to alter their position in case they are slightly early or late aside from the BPM. That's pretty much all there is to basic timing in FL.

Update Log:

22/03/13 - Added mixing 101, further reading and additional software sections.

23/03/13 - Added GNU Solfege to the additional software section, added compressors and delay to the mixing 101 section.

24/11/13 - Added beatmatching for dummies